Heart rate: 70 beats per minute

Arterial blood pressure: 81/40

Non-invasive blood pressure: 94/50

Temperature: 35 degrees Celsius

“We’re ready for positioning,” the anesthesiologist, Dr Usha Shenoy, tells Dr N.I. Kurien, professor and head of the department of neurosurgery at Jubilee Mission Medical College and Research Institute in Thrissur, Kerala. There are around nine surgical staff in the operating room. The silence is punctured by the steady beep of the monitor. The patient is a 20-year-old man who presented with headache, vomiting and balance problems two months ago. The CT scan showed a mushroom-like tumour compressing the medulla oblongata, the lower part of the brainstem, which contains the control centres for the heart and the lungs. Damage to this area can affect blood pressure, breathing and consciousness. The body is positioned so that the head is turned to one side. The part of the scalp where the incision is going to be made is marked, the hair is shaved off and an anti-microbial solution is applied to make the area sterile.

The operation starts. A radio plays in the background, belting out melodic Malayalam songs. A rectangular incision is made, exposing the bone, which is slowly chipped away. With a click, like a lock falling into place, each piece of bone eases off. Beneath lies the dura, the thick membrane that surrounds the brain. It is slowly prised open to expose a small greyish blob: the cerebellum.

“When you do a brain surgery, you have to intimately know the safe passages of the brain,” Kurien tells me. “Either you approach the brain through the safe region, or you retract or push back parts of the brain.”

In this case, the cerebellum is retracted. Through the microscope, I see a gently-sloping, pulsating wall of red and, to one side, white flakes dotting the landscape. The flakes are the tumour. Kurien, using a suction tube, sucks out the blood and cerebrospinal fluid and uses a saline solution to moisten the brain and wash away the clots. Although the tumour is not situated too close to the brain, and hence is easier to remove without damaging the brain, the operation is extremely risky because there are intricate blood vessels criss-crossing the region. Disturbing them can cause permanent damage to the patient.

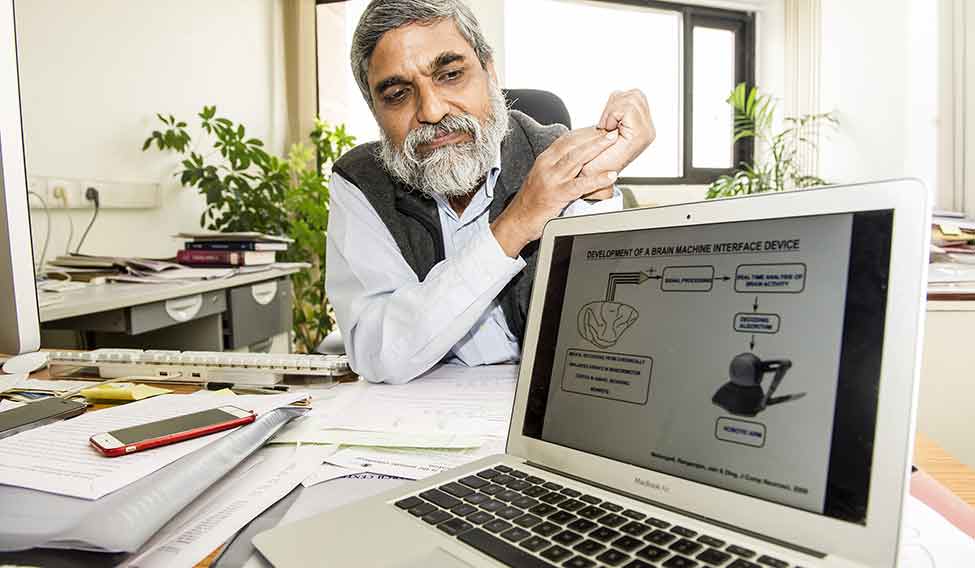

Prof Neeraj Jain, neuroscientist at the National Brain Research Centre in Manesar, Haryana

I watch Kurien slowly and meticulously sucking out and, later, removing the tumour with forceps. And then I focus on the pulsating wall of red: the brain. It is amazing to think that this clump of tissue and membrane and salty liquid that fits inside your skull and uses 20 watts of power is responsible for every thought, every pleasure, every memory and every experience of yours. Billions of neurons firing inside this organ are responsible for constructing the sense of reality in which you live. The brain builds your world; it gives you the sense of you.

For centuries, scientists have been trying to decode it. If we use only 10 per cent of the brain, then what could we do if we could use the brain to its full capacity? Could we assimilate and retain information in encyclopaedias within mere seconds as in Barry Levinson’s film Rain Man? Could we control the world using a telepathy helmet like Magneto in X-Men? Could we erase painful memories as in the film Eternal Sunshine of the Spotless Mind? Will we be able to transfer consciousness into an alternate reality as in The Matrix? Will we be able to move objects with the power of our thoughts like Carrie in Stephen King’s novel?

Easy drive: A Ploud.io team member shows the prototype of a helmet that could be used in the future to control the movements of a car with the mind.

Daniel Gilbert, in his book Stumbling on Happiness, puts forward an interesting theory: he says the biggest achievement of the human brain, the reason we have a bigger and more developed frontal lobe or prefrontal cortex than other mammals, is that it can imagine the future. “To see is to experience the world as it is,” he writes. “To remember is to experience the world as it was, but to imagine—ah, to imagine is to experience the world as it isn’t and has never been, but as it might be.” That’s why science fiction is so important; it helps us imagine the world of the future. And it might not be so far-fetched either. If not telepathy helmets, we have discovered synthetic telepathy or brain-to-brain communication through a computer. Brain-machine interfaces are helping the paralysed regain function of their limbs. Robots are assisting doctors perform complicated surgeries and beating grandmasters in chess. Big companies are introducing self-driving cars on the roads. Smart mice are roaming around with boosted memory. In many ways, we are steamrolling into the future we have imagined.

Evolution has designed various ways for humans to communicate with each other, including language, behaviour, gestures and facial expressions. But these methods are only partial; we often make mistakes in interpreting what the other person says. But what if you could directly communicate with your thoughts? What if you could connect one brain to another so that thoughts, in the form of electrical impulses, can be decoded and transferred to the brain of another person?

In August 2014, two words, ‘hola’ and ‘ciao’ (hello in Spanish and goodbye in Italian), were communicated between researchers sitting in Kerala and Strasbourg, France. The researcher sitting in Kerala wore an electro-encephalogram (EEG) helmet and imagined he was making a series of either horizontal or vertical movements corresponding to the words. This was translated into digital binary code and sent via the internet to the researchers sitting in France, who were connected to a machine that converted the code into currents of electricity. The currents appeared to the researchers as dots of light known as phosphenes, which could be converted back into binary code and then decoded into the words ‘hola’ and ‘ciao’.

“Pseudo-random binary streams encoding words were transmitted between the minds of emitter and receiver subjects separated by great distances, representing the realisation of the first human brain-to-brain interface,” wrote the researchers in a paper published in the journal PLOS ONE. “We envision that hyperinteraction technologies will eventually have a profound impact on the social structure of our civilization.”
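
The word-to-binary step described above can be sketched in a few lines of Python. This is only a rough illustration: the fixed 5-bit code per letter, the function names and the mapping of 0 and 1 to horizontal and vertical movements are all assumptions made for the example, not the scheme the researchers used. The receiving end is modelled simply as reading the same sequence back.

```python
# Assumption: a fixed 5-bit code per letter (a=0 ... z=25). This is an
# illustrative scheme, not the cipher used in the published experiment.
BITS_PER_LETTER = 5


def encode_word(word: str) -> str:
    """Turn a lowercase a-z word into a string of 0s and 1s."""
    return "".join(format(ord(ch) - ord("a"), f"0{BITS_PER_LETTER}b") for ch in word)


def bits_to_imagined_movements(bits: str) -> list[str]:
    """Hypothetical mapping: 0 = horizontal movement, 1 = vertical movement."""
    return ["vertical" if bit == "1" else "horizontal" for bit in bits]


def movements_to_bits(movements: list[str]) -> str:
    """Receiver side: turn the observed sequence back into bits."""
    return "".join("1" if m == "vertical" else "0" for m in movements)


def decode_bits(bits: str) -> str:
    """Split the bit string into 5-bit chunks and map each back to a letter."""
    chunks = [bits[i:i + BITS_PER_LETTER] for i in range(0, len(bits), BITS_PER_LETTER)]
    return "".join(chr(int(chunk, 2) + ord("a")) for chunk in chunks)


for word in ("hola", "ciao"):
    bits = encode_word(word)
    received = movements_to_bits(bits_to_imagined_movements(bits))
    print(word, bits, decode_bits(received))
```

Under this toy scheme each four-letter word becomes 20 bits, which hints at why sending even two short words from one brain to another is slow work.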

Raghu Venkatesh (in blue shirt), founder, Ploud.io, a Bengaluru-based startup

Most words are a combination of thought and feeling. However, language is often inadequate to express what you think and feel when you speak or describe the experience. Imagine you are looking at a beautiful valley strewn with flowers the colour of sunshine. Beyond “amazing” and “wow”, it is impossible to vocalise what you feel on seeing the valley. But what if you can? If you can extract words directly from the brain instead of articulating them, can you capture the feeling associated with those words? In other words, can you transfer an experience to another person? Can you transfer a memory? Can you live in another person’s brain?

“All this won’t be possible anytime in the near future,” says Professor Neeraj Jain, a neuroscientist at the National Brain Research Centre in Manesar, Haryana.

He has been working on something called a brain-machine interface for many years now. A brain-machine interface uses a computer algorithm to decode the electrical impulses sent by the brain, with the aim of restoring limb function in paralysed patients so that they can perform simple tasks like self-feeding or switching on the TV. Around five years ago, he collaborated with scientists at the Indian Institute of Science to devise an experiment in which electrodes were implanted in the pre-motor cortex of monkeys, the region where movement planning happens. The monkeys were induced to pick up a reward from bowls placed on their left or right side. Their movements were captured by an analyser which decoded the information, and a robotic arm moved left or right corresponding to the motion of the monkeys.

“In human beings, too, the basic principle is the same,” says Jain. “But the logistics is more difficult. You need more collaboration and resources and involve neurosurgeons and hospitals. Scientists have achieved this in controlled conditions but the brain-machine interfaces are not elaborate, robust or simple enough for paralysed patients to actually use them at their homes.”

Prof Shiv Kumar Sharma, scientist at the National Brain Research Centre in Manesar, Haryana

He says the biggest hurdle in neuroscience right now is that our algorithms are too primitive to mimic the way in which the brain codes the information. “The information is all there,” he says. “You just need someone to think in a different way. Einstein discovered the theory of relativity in a stroke of brilliance. Someone will decode the brain in the same way.”

A startup based in Bengaluru called Ploud.io is working on something similar. It is trying to build a neuro-VR headset, currently in the proof-of-concept stage, which will be able to access your thoughts so that your intent of action can be intercepted and altered even before the action happens.

“We’ve moved from keyboard to touch but they are both ways of communicating your thoughts,” says Raghu Venkatesh, founder of Ploud.io. “What if you can delete the user interface and directly communicate your thoughts? We are working on a system that will be able to sense your mindset based on the scenario and then formulate an algorithm that will get the output.”

Why virtual reality? Because the team at Ploud believes that virtual reality is the future and its maturing will coincide with the decoding of brain signals. “Everyone thinks VR is virtual reality,” says Fazil V.N., design head. “No one is focusing on the second world that comes after virtual reality. How real can you make a world inside virtual reality?”

“Imagine a social network like Facebook,” says Joshua Abraham, in charge of sales and strategy at Ploud. “Instead of putting up posts and viewing pictures, what if you could actually walk into Facebook and communicate directly with your friends? Or take the field of education. Instead of learning about plant cells from a textbook, what if you could walk into a VR plant and witness how the cells interact and work so that the information will be retained in your brain forever? What if you could interact with the characters in a movie that you are watching?”

Or what if you could induce emotion in the viewer while watching a film by attaching electrodes to his brain? Alfred Hitchcock envisioned this in the 1950s. During the shooting of North by Northwest in 1959, he told his scriptwriter Ernest Lehman that he would love to access the spectator’s emotions directly. “The audience is like a giant organ that you and I are playing,” he said. “At one moment we play this note, and get this reaction, and then we play that chord and they react. And someday we won’t even have to make a movie—there’ll be electrodes implanted in their brains, and we’ll just press different buttons and they’ll go ‘oooh’ and ‘aaah’ and we’ll frighten them and make them laugh. Won’t that be wonderful?” Neuro-thrillers like Lars von Trier’s Melancholia, today, are making his prediction come true by tapping into the brain directly to evoke emotion in the viewer, writes Patricia Pisters in Aeon. The melancholic colours of the setting, the dark music and the slumberous movements of the protagonist are designed to directly stimulate the brain even before the narrative begins.

And if the brain can be stimulated, can some of its functions, like intelligence and memory, be boosted? The first step towards the development of a memory-enhancing pill in India was taken by Professor Shiv Kumar Sharma, a scientist at the National Brain Research Centre, and his team last year, when they conducted experiments to prove that the memory power of rats could be boosted by injecting them with a compound called sodium butyrate. A fundamental feature of memory formation is that spaced training, or learning something by repeating the process of memorising it at long intervals, takes less time to commit what you are learning to memory than massed training, or repeating the process at shorter intervals. Rats given massed training, when administered sodium butyrate, were able to memorise the same thing as fast as rats given spaced training. So if you are cramming lessons at the last minute to prepare for an exam, this compound might help you retain those lessons as effectively as if you were memorising them at longer intervals. The experiment might prove that long-term memories can be formed and altered through gene modification.

Deekshith Marla, co-founder of Arya.ai, a Mumbai-based artificial intelligence startup

“We are not yet ready to conduct the experiment on humans, as this compound could change many other things in the body,” says Sharma. “The side effects could be harmful.”

Instead of directly boosting human intelligence, can we transfer intelligence to a machine? Artificial intelligence, or the simulation of human intelligence processes by machines, might be more prevalent than you think. As Pedro Domingos writes in his book The Master Algorithm, machine learning is all around you. Machine learning is a way for computers to know things by following certain rules that their programmers have not specified. When you read your email, you don’t see most of the spam because machine learning filtered it out. The machine learning systems at Amazon and Netflix make suggestions on which book to read or movie to watch based on your preferences. Facebook uses machine learning to decide which updates to show you. Pandora learns your taste in music using machine learning. Mutual funds use learning algorithms to help pick stocks. Your cell phone uses machine learning to correct your typos, understand your spoken commands and recognise bar codes. “Society is changing one learning algorithm at a time,” Domingos writes. “Machine learning is remaking science, technology, business, politics and war.” The interesting thing is that, till now, no one has really figured out what happens inside a machine when it is learning a task. “At best, we’re left with the impression that learning algorithms just find correlations between pairs of events, such as googling ‘flu medicine’ and having the flu,” writes Domingos. “But finding correlations is to machine learning no more than bricks are to houses, and people don’t live in bricks.”

A sub-field of machine learning is deep learning, where programmers use neural networks, or simulations of the way neurons interact with each other in the brain, to copy the tasks that the brain is able to do. Although research on neural networks started in the 1950s, the best neural networks developed today are extremely crude versions of the neural networks in the brain, mainly because a neuron is an incredibly complex nerve cell that is difficult for the transistors inside a computer to simulate. But there has been some progress. Now that vast amounts of data are available and the computational power of machines has exploded, deep learning algorithms are able to make sense of this data much better than traditional algorithms. Deekshith Marla is co-founder of Arya.ai, a Mumbai-based artificial intelligence startup that became the first Indian startup to be selected by Paris&Co, a French innovation agency, as one of 21 companies globally doing standout innovation. He gives an example: “Imagine a hill station coffee shop owner orders beans every morning depending on the temperature. He has lots of data derived from his history of ordering beans to help him predict what quantity of beans needs to be ordered every day. Big Data algorithms recommend that he order more beans when there is a drop in temperature, even when there is heavy snow, as there will be more demand for coffee that particular day. However, deep learning algorithms consider all parameters and recommend that the owner doesn’t buy more beans as the heavy snow will deter customers from stepping out of their homes.”
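
To make the coffee shop example concrete, here is a toy sketch in Python. It is not a deep learning model: both functions are hand-written rules, and the 10-degree threshold, the 10 kg base order and the function names are invented for illustration. The point is only the difference between a rule that looks at temperature alone and one that also takes heavy snow into account, an interaction that a real learning system would have to pick up from the owner's order history.

```python
# All numbers below are hypothetical, chosen only to illustrate the example.
COLD_THRESHOLD_C = 10.0   # assumed temperature below which demand rises
BASE_ORDER_KG = 10.0      # assumed normal daily order of beans
COLD_DAY_FACTOR = 1.5     # assumed increase in the order on a cold day


def temperature_only_order(temp_c: float) -> float:
    """Order more beans whenever it is cold, ignoring everything else."""
    if temp_c < COLD_THRESHOLD_C:
        return BASE_ORDER_KG * COLD_DAY_FACTOR
    return BASE_ORDER_KG


def multi_factor_order(temp_c: float, heavy_snow: bool) -> float:
    """Also consider snow: customers stay home, so do not order extra beans."""
    if heavy_snow:
        return BASE_ORDER_KG
    return temperature_only_order(temp_c)


# A freezing day with heavy snow: the two rules disagree.
print(temperature_only_order(-2.0))
print(multi_factor_order(-2.0, heavy_snow=True))
```

On a cold, clear day the two rules agree; they part ways only when the snow keeps customers indoors, which is the case Marla describes.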

Arya.ai, founded in 2013, works with developers across travel, research, e-commerce, customer care, medicine and banking sectors. Marla was picked this year as one of Forbes’ 30 under-30 Asians in consumer technology.

“Deep learning as a field is quite promising and will continue to evolve over the next decade as we continue to learn and combine ideas from different fields such as statistical learning, Bayesian reasoning, neuroscience and maybe philosophy, too,” says Navneet Sharma, co-founder of the AI company SnapShopr, which has designed an image recognition software that will help you search the entire online catalogue of a retail shop using images instead of text. “It will disrupt every industry you can think of and the world should probably start preparing for that. Retail, medical, education, energy, transport, security—all will be impacted. Not at once but gradually and it’s very likely that AI powered by current and upcoming deep learning algorithms will become such a part of our society that we will not notice if it’s there at all.”

It seems this is already happening. AI could do to white collar jobs what steam power did to blue collar ones. In 2013, two Oxford academics published a paper claiming that 47 per cent of American jobs are at high risk of being automated in the next 20 years. Take the field of business journalism. The startup Narrative Science uses artificial intelligence to sort through data and compile business reports. It claims to communicate the insights buried in Big Data through “intelligent narrative indistinguishable from a human written one” that you can comprehend, act on and trust. Artificial intelligence is also making inroads into sectors like e-commerce, real estate, financial services and health care. Even a creative field like art is not exempt from its ambit. Recently, Google’s algorithms created artworks for an exhibit in San Francisco that imitated the work of artists like Vincent van Gogh. The most expensive image was sold for approximately Rs 5.37 lakh.

Rajeev Pathak, CEO of FunToot, a Bengaluru-based artificial intelligence startup

An AI startup called FunToot, based in Bengaluru, has developed software that it calls an intelligent personal tutor. It mimics the functions of a teacher by teaching difficult concepts to children. By understanding the background, interests, gender and mental ability of the child, the system is able to personalise its teaching to each individual child. In the future, says Rajeev Pathak, CEO of FunToot, machines will be able to recognise the mood of the child based on body language and facial expressions and accordingly change the way they teach the child. There will be a free interface between the child and the machine for spoken communication. Interaction will be through speech, like how teachers interact with students, rather than text. Machines will be able to understand and assess subjective, non-logical answers by children. For example, if the machine asks a child to briefly explain India’s freedom movement, the question is extremely subjective, and the machine might not be able to assess the answer accurately today.

According to a paper published by consulting firm Gartner, we have migrated from physical and virtual keyboards to touch, and in the future users will move to voice commands. By 2020, virtual personal assistants (VPAs) “will facilitate 40 per cent of mobile interactions”. VPAs will be linked to apps so that you won’t have to exit an app to engage a VPA. If you read the review of a movie on a particular site, the VPA will be able to book tickets for the movie without you having to visit the movie booking website.

But AI has its limitations. The data available for machine learning algorithms is huge but it is not infinite. Hence, the potential of these algorithms, too, is limited. The future of AI could be the development of algorithms that can learn without human assistance. Domingos, in his book, talks about a “master algorithm” which, once discovered, could derive all knowledge in the world (past, present and future) from data. It would change the world in ways we can barely begin to imagine. It could solve some of the hardest problems we face, like curing cancer. Domingos says it will be a more complicated version of the searches that Amazon and Netflix do every day, except that it is looking for the right treatment for you instead of the right book or movie.

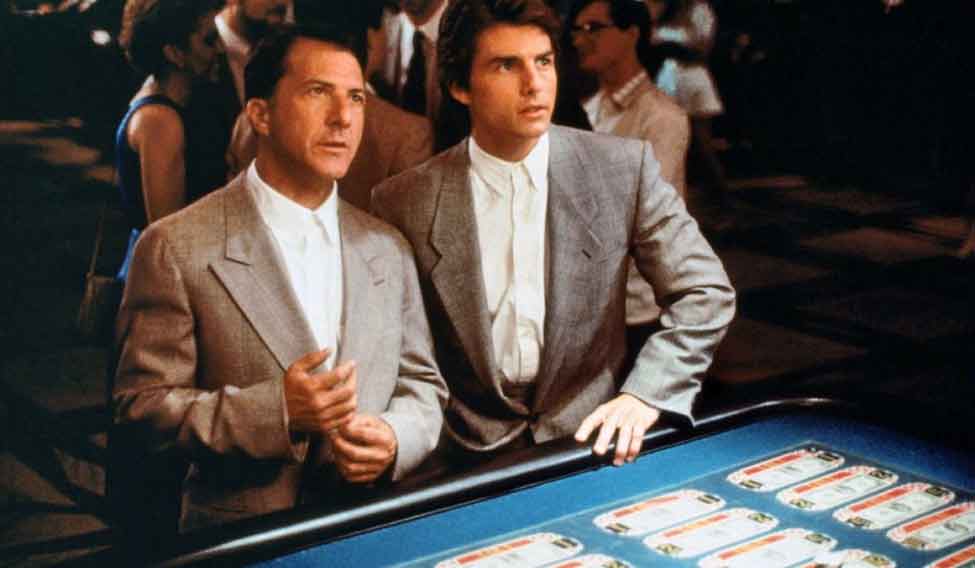

Could we assimilate and retain information in encyclopaedias within mere seconds as in Barry Levinson’s film Rain Man (above)? Could we control the world using a telepathy helmet like Magneto in X-Men? Could we erase painful memories as in the film Eternal Sunshine of the Spotless Mind?

If we are able to devise a master algorithm that will allow a machine to function without human intervention, will we really be able to trust such a machine? Are we moving towards a future in which robots will become more powerful than humans? Jayakrishnan T., CEO of a robotics company called Asimov Robotics in Kerala, doesn’t think so. “A robot can only excel in one vertical,” he tells me. “So if I ask it to get me a mosquito net for this room, it will get me a mosquito net large enough to cover the entire city. It does not have the consciousness to assess a situation subjectively.” Then he tells me something else. “A robot can mimic the personality of human beings, which is our external characteristics, but it can’t mimic the person, which is something unique to you or me. It is what makes us us.” I ask him what he means but he can’t say. “I can’t explain it,” he says. “But I know it.”

In a small way, I understand what he means. In 1974, a philosopher called Thomas Nagel published an essay called What Is It Like To Be A Bat?

We can imagine having webbing in our arms that will enable us to fly around at dusk and dawn catching insects in our mouths. Or having poor vision and perceiving the surrounding world by a system of reflected high-frequency sound signals. Or spending the day hanging upside down by our feet in an attic. But we will never be able to understand the experience of being a bat, its consciousness or its point of view. “Insofar as I can imagine this (which is not very far),” he wrote, “it tells me only what it would be like for me to behave as a bat behaves. But that is not the question. I want to know what it is like for a bat to be a bat.”

In other words, the batness of a bat is something that is unique to the bat. Similarly, can a robot really understand the personness of a human being? Isn’t that an experience that is unique to us?

The more we know, the more we understand how little we know. This is best illustrated by Plato’s allegory of prisoners chained in a cave, unable to turn their heads. The puppeteers, who are behind the prisoners, hold up puppets that cast shadows on the wall of the cave. The prisoners can’t see the puppets but they see the shadows of the puppets on the wall. Such prisoners would mistake appearance for reality. They would think the things they see on the wall (the shadows) were real; they would know nothing of the real causes of the shadows.

Are we also mistaking appearance for reality? When we look at something, the photoreceptors in our eyes detect the light and convert it into electrical impulses that are sent to the brain. But there are no photoreceptors in the area at the back of the eye where the optic nerve starts. This means that there should be a gaping hole in our field of vision, but the brain fills in the hole and gives us the illusion of looking at something that is smooth and continuous. If the brain is playing tricks on us, is it also silently mocking our attempts to unravel its mysteries?