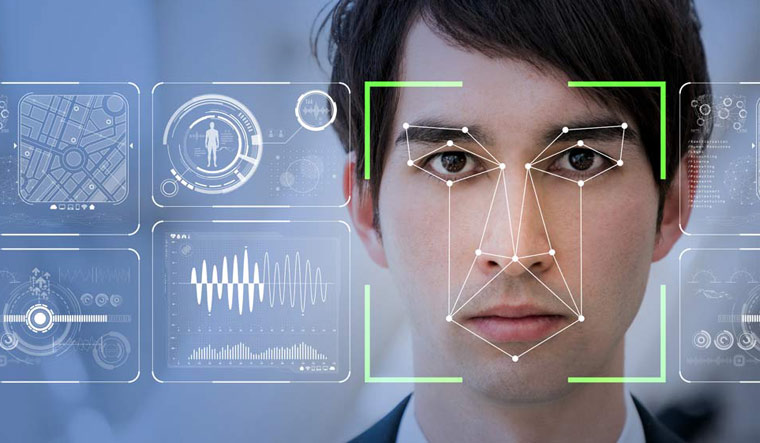

Last September, the world heard of CUBIC’s ‘fastrack gateless gateline’, a system that uses facial recognition in place of the smart cards currently used on London’s Tube. Soon after came Apple with its new Face ID technology, which replaced the fingerprint lock on the phone. Security concerns were quickly forgotten as the tech giant claimed that the new technology was more efficient than the existing biometric security.

Face ID meant doing less: just looking at the phone was enough to start the process. The smart card and the fingerprint gave way as the face came under the scan of IR rays. The use of infrared sensors means the system cannot be fooled by a 2D image. That said, reports of the iPhone X’s Face ID failing have flooded the internet. Commercial facial recognition technology is bound to be more efficient, or is it?

Biometric scans, once established, soon became a standard in the commercial sphere. Lately, personal devices too have absorbed this technology, all in the name of privacy and security. There have been countless debates, framed as “something one has” vs “something one knows”, over whether biometrics are on the same plane as passcodes. At the same time, intelligence bureaus and central policing units have been successfully using biometrics in addition to traditional means of identification. These means always included photographs, but the photographs were monitored and matched manually.

Facial recognition has begun to be rolled out in a few countries, such as the US, China and the UK. Often, these deployments involve a degree of surveillance that the average person might not be aware of. There have been instances where Chinese officials used facial recognition and nabbed the wrong individuals. There are articles on how the US and the UK are on an unsanctioned spree of using automatic face recognition, and are failing at it. Proper laws have not been laid out for such technology, and the scope for its misuse has so far been painted only in fiction.

Public spaces are beginning to be dominated by what is often called an “offensively crude” technology. Recently, GovTech, a Singapore government agency, announced what it calls 'Lamppost-as-a-Platform', a pilot project that is scheduled to begin next year. The programme will see more than 100,000 lampposts mounted with cameras backed by facial recognition, along with sensors continually monitoring the air, noise, and surroundings.

The world has had a glimpse of how this technology fails, but is unaware of how it could be misused. If the data on one’s facial structure is compromised, it cannot be reset like a password: it is almost impossible to restructure a face. If the system that controls it is compromised, it might bring down an entire nation.

Hence, while most governments get carried away by the “greatness” of such systems, questions remain about their security. The recent data breach dealt a huge blow to such 'assumed security', with even the social media giant Facebook failing to give convincing answers.

A fictional account of such a facial recognition-enabled state being compromised, and of the disasters that follow, is well portrayed in Ubisoft’s Watch Dogs. Why have people not been adequately informed of the risks? Have the risks been calculated by the creators? Or will they dawn on us all as a surprise of the greatest order? Governments and companies across the world that are hurrying to adopt facial recognition technology will have to answer at least a few of these questions before thrusting it on people.