The father of modern computing and World War II hero, Alan Turing, presented his seminal paper on artificial intelligence (AI) in 1950. The paper opens with these words: ‘I propose to consider the question: Can machines think?’

Turing’s contemplations would set the stage for a technological revolution that is now transforming society for the better, but is also bringing unforeseen challenges. Writing about it, tech researcher Nirit Weiss-Blatt described 2023 as the Year Of AI Panic. Although AI and machine learning have been around for decades, things began to change in June 2017.

THE NEW WORLD ORDER OF AI

The world saw a paradigm shift when a group of Google engineers published ‘Attention Is All You Need’, an oddly titled paper proposing a new machine learning architecture called the ‘transformer’. This paper paved the way for the dominance of large language models. Generative AI took centre stage, replacing good old-fashioned symbolic AI.

This newfangled set of algorithms fundamentally changed how AI models are trained. Earlier, the problem with AI was knowledge: you needed to feed a machine enough rule-based knowledge about a subject for it to make intelligent decisions. Today, all you need is an enormous amount of data and enough computational power, the lever and fulcrum of the new world order.

This is the story of how we discovered the phenomenon of emergence in computational data. The phenomenon, which biologists use to explain complex behaviour arising from simple roots, such as the flocking of birds and the activity of neurons in the human brain, is now at work in complex machine learning tasks.

To put it simply, a large data set coupled with high computational power will yield machines that are capable of performing tasks with emergent properties. It is a kind of machine learning evolution, a sudden appearance of new behaviour. Large language models (LLMs), such as ChatGPT, display emergence by suddenly gaining new abilities as they grow. The data set behind ChatGPT, the new messiah of the digital world, is nothing but a large set of words, about 300 billion of them, all scraped from the internet. It is an ever-evolving symphony where the notes of AI weave through the tapestry of constant self-innovation.

In an era marked by the rapid evolution of artificial intelligence, it is essential to avoid succumbing to a doomsday fear psychosis that indiscriminately vilifies all aspects of AI. Acknowledging and addressing risks associated with this burgeoning technology is the need of the hour.

AI TRICKERY & DEEPFAKES

Rapid technological advancement is the cornerstone of human civilisation. From rubbing stones to inventing the wheel, technology has played a key role in societal evolution. All previous tech innovations helped us communicate or propagate our ideas. While AI has the potential to revolutionise our lives positively, it also has a darker side that malicious actors exploit to perpetrate crimes that were once unimaginable.

Deepfakes, a form of AI-based manipulation, have become a significant concern, with far-reaching implications for trust, privacy and security. The recent barrage of deepfake audiovisuals has sent chills across different sections of society. These deepfake multimedia creations, designed to manipulate opinions and perceptions, can have serious consequences. The rise of misinformation, disinformation and cyber scams is evident, leading to financial losses, reputational damage and even strife. According to a recent study by Sumsub, India is among the top targets of deepfake identity fraud. From sextortion to deepfake videos and audio, scammers are already using AI to elevate chicanery to dazzling new heights.

While governments and agencies across the globe are responding to this new theatre of crime, it is still a cat-and-mouse game. From the general public to big corporations and celebrities, everyone is still figuring out the best practices to deal with AI-enabled fraud, copyright infringement and reputational damage. There is more to this AI trickery than meets the eye. Deepfakes blur the line between reality and fabrication, requiring individuals to invest more effort in discerning the truth. The resulting exhaustion of critical thinking poses a serious threat to society.

One of the primary concerns surrounding deepfakes is the erosion of trust. Seeing is no longer believing. This erosion has the potential to undermine societal structures, leading to what scholars call reality apathy.

Fabricated narratives have the potential to influence the collective memory of a society, creating collective false memories despite evidence to the contrary, as in a phenomenon known as the Mandela Effect. It was named after the false collective memory of Nelson Mandela's ‘death’ in prison in the 1980s. In the age of AI and deepfakes, the lines between reality and illusion become even more blurred.

The existence of convincing deepfake content can also give rise to what legal scholars Robert Chesney and Danielle Citron call the Liar's Dividend. Accusations based on genuine recordings and videos can be dismissed by claiming that the source material has been manipulated using AI. This situation will be a formidable challenge for polity and society.

THE ROAD AHEAD

Amidst these emerging threats, which will impact not just individuals but society as a whole, the road ahead is littered with uncertainty. All stakeholders, including governments, tech platforms and the general public, must unite and implement robust measures. Governments are exploring legislative measures to regulate the creation and dissemination of deepfakes. Recent initiatives by the Union government in this direction signal a growing awareness of the need to address the challenges posed by AI-based manipulation. The European Union's AI Act, which will regulate the use of artificial intelligence in the EU, and the 'Kratt' initiative of Estonia, a small Baltic nation, are good starting points.

Technological efforts should focus on developing tools for flagging AI-based manipulations. Tech companies and governments around the world are already exploring ways to detect AI-generated content and to label it with metadata and encrypted watermarks. Google’s SynthID is one such tool, which is being developed to provide AI disclosures about any content. In fact, major tech platforms have pledged to add metadata and watermarks in an effort to curb the mala fide use of AI-generated content.

However, despite some success stories, integrating AI into governance and public policy is still a distant dream. For the general public, education and awareness emerge as the first line of defence against AI-based crimes and manipulation. We need to incorporate media literacy programmes to equip individuals with the skills to critically evaluate the content they encounter. Training programmes that keep pace with technological advancements should be at the forefront now. For law enforcement agencies, the key lies in adaptation, innovation and collaboration. Harnessing the power of AI, not as a weapon but as a shield, is essential.

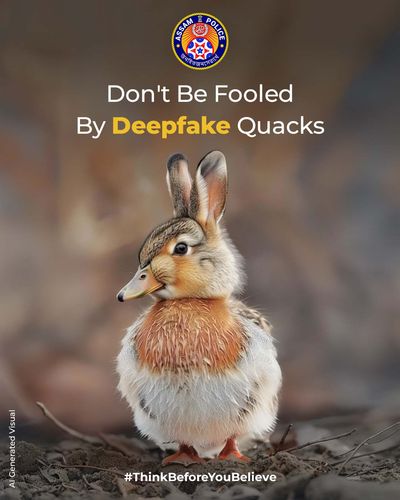

The Assam Police's AI campaign, particularly its positive engagement in raising awareness about deepfakes, demonstrates the potential of AI as a communication tool. Its #ThinkBeforeYouBelieve initiative is a notable example, focusing on deepfakes that target children.

There are serious concerns about the privacy and safety of children because of the growing presence of AI in our lives. The emergence of AI-based toys, chatbots and social media has the potential to create a seismic shift in the social and behavioural development of our children.

Today's children are growing up in a society that is largely augmented with AI, and their interaction with the world around them has been altered by it. In a world where reality is just a click away from being reinvented, society must become the guardian of truth, armed with scepticism and a touch of digital Sherlock Holmes.

AI presents unprecedented opportunities for societal advancement. From rapid advancements in medical science to fighting climate change, AI is at the forefront of tech-based interventions in solving global issues. AI systems are now performing top-level scientific research, which will impact millions of lives positively. The field of clinical genomics has been transformed by AI’s ability to process large-scale data and build predictive models.

But AI also comes with its fair share of challenges and risks. Instead of succumbing to panic, we need to adopt a more dynamic approach to prepare for and mitigate AI threats. With public awareness initiatives, adequate regulations, fair use clauses and multilateral collaborations, we can navigate the AI maze safely. Perhaps it is time we turned Turing’s question about machines' ability to think on ourselves. Let's take a moment to think before we react. Let’s prepare, not panic.

―Harmeet Singh is special director general of police, Assam. He is also in-charge of Assam Police Smart Social Media Centre-Nagrik Mitra, where Salik Khan is tech policy and communication consultant.